Popular plagiarism detection companies are cracking down on sneaky students ‘gaming the system’ with ChatGPT, but experts urge that AI is here to help.

Generative AI (increasingly being referred to now as GenAI) concerned academics and administrators in 2022 with its ability to conjure up complete essays out of thin air and churn out at least semi-accurate content from a single sentence prompt.

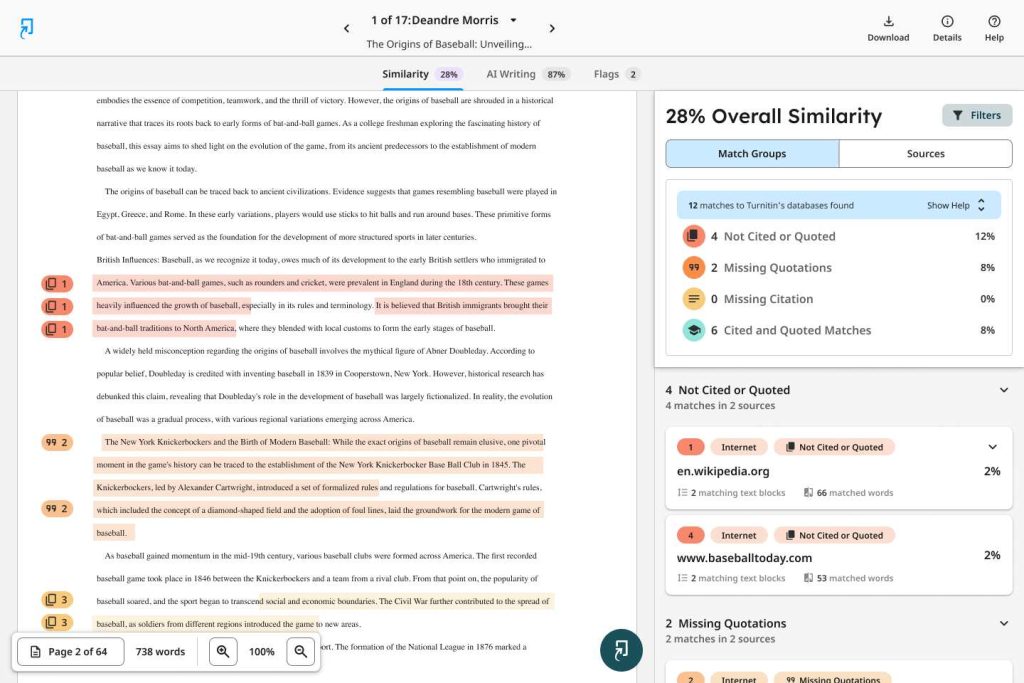

In an effort to catch out the crafty kids using the generative tool to sidestep assessment demands, companies like Turnitin spent a huge chunk of 2023’s school year developing their own AI detection software, helping teachers sift through essay pieces from across the globe in search of disingenuous work.

Thanks to the way these Large Language Models (LLMs) and their artificial ‘brains’ piece together content from millions of articles, essays and academic assignments, Turnitin’s regional vice-president James Thorley says the AI “fingerprint” was relatively easy to spot.

“Ultimately, LLMs work in a predictive fashion. They are always looking for the next most likely word in a sentence, which is vastly greater than any human can have,” he told EducationDaily.

“Based on what they’ve already read, they’re making an assessment of ‘ok, the next word of the sentence – what is it most likely to be?'”

“Humans write in a completely different way. We do not think word by word by word. We think in a much more random way. It’s this difference in predictability that allows us to predict what is and isn’t AI-generated.”
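To make the predictability idea concrete, here is a minimal sketch in Python. It is not Turnitin's method, which the company does not describe here. It assumes a toy word-pair (bigram) model standing in for an LLM, and the function names `train_bigram` and `perplexity` are hypothetical. Text the model finds easy to predict gets a low score, and text it finds surprising gets a high one.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each word follows each other word (toy stand-in for an LLM)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def perplexity(tokens, counts, vocab_size):
    """Lower values mean the text was easier for the model to predict."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        raise ValueError("need at least two tokens")
    log_prob = 0.0
    for prev, nxt in pairs:
        total = sum(counts[prev].values())
        # Add-one smoothing so unseen word pairs still get a small probability.
        p = (counts[prev][nxt] + 1) / (total + vocab_size)
        log_prob += math.log(p)
    return math.exp(-log_prob / len(pairs))


# Hypothetical training text; a real detector would rely on a far larger model.
corpus = "the cat sat on the mat and the cat sat on the rug".split()
model = train_bigram(corpus)
vocab_size = len(set(corpus))

predictable = "the cat sat on the mat".split()
surprising = "mat the on sat cat the".split()

print(perplexity(predictable, model, vocab_size))
print(perplexity(surprising, model, vocab_size))
```

The first sentence follows word pairs the model has already seen, so it scores lower than the scrambled one. Any real detector would need a threshold on such a score, and where to set it is exactly the kind of grey area Thorley describes.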

Fast-forward more than a year from the launch of the company’s Similarity Report detection software, and the figures are staggering. Of the 200 million pieces put under the microscope, 22 million (11 per cent) included at least 20 per cent AI-generated writing.

For three per cent of all submissions (or six million pieces), that number was as high as 80 per cent.
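The percentages are easier to follow with the arithmetic written out. The short Python check below uses only the figures quoted above, and the variable names are illustrative. It shows that six million is three per cent of the 200 million total, not of the 22 million flagged pieces.

```python
total_reviewed = 200_000_000    # pieces checked, as quoted above
at_least_20_pct = 22_000_000    # pieces with at least 20 per cent AI writing
at_least_80_pct = 6_000_000     # pieces with at least 80 per cent AI writing

# Share of all reviewed pieces, expressed in per cent.
share_20 = at_least_20_pct * 100 / total_reviewed
share_80 = at_least_80_pct * 100 / total_reviewed

# What three per cent of the 22 million flagged pieces would be instead.
three_pct_of_flagged = at_least_20_pct * 3 / 100

print(share_20, share_80, three_pct_of_flagged)
```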

Catching students in the cheating act

Concerning, but I’m not exactly surprised. Eight years ago, I would’ve jumped at the chance to get my computer to write a two-thousand-word analysis of Shakespeare’s (exceedingly dull) Romeo and Juliet.

But if schools are catching them in the act, no harm, no foul, right? Well, despite the company boasting a less than one per cent AI detection error rate, Thorley says the software is playing a game of cat and mouse with students trying to find ways around the system.

“It’s never 100 per cent black and white, there are always grey areas in the margins,” he told EducationDaily.

“One of the things we have seen is sometimes students would be using an LLM and then using another AI tool to paraphrase the output of that LLM.

“So, one of the things we’ve recently done is release paraphrase detection, so that if we know the first paper is AI generated and we see that parts of it have been paraphrased using another tool, then we actually highlight that differently.”

But is there a better approach to AI?

With AI proving as popular as it is among students, it’s clear programs like ChatGPT aren’t going anywhere anytime soon. So, what can schools do to stop the software from interfering with student learning?

Well, when it comes to classroom learning, it could be out with the old and in with the new. Instead of fighting back against a seemingly insurmountable wave of AI-generated tools, Thorley says it is up to the teachers to embrace technology that will shape the modern workplace.

“One of the big changes that AI may bring when it comes specifically to assessment is the need to focus on the process. The need to focus on how the students got to the outcome,” he told EducationDaily.

“With an essay, everyone knows ChatGPT will spit you out an essay in seconds, no matter what question you put in.

“It’s going to be more and more important I think to see the evidence and see the process the student went through to get to that essay instead of just the essay itself.”

This “human-centred” approach, as he called it, would supposedly set students up for the real world, equipping them with skills in AI tools and learning processes that are highly sought after in today’s job market.

But if AI is to become a mainstay of the Aussie education system, Thorley recognised that a greater emphasis would have to be placed on transparency around its use for both students and teachers.

“The key to academic integrity traditionally, in terms of thinking about plagiarism, is that you always have to say your sources,” he told EducationDaily.

“While AI is different…it’s almost more like contract cheating. Potentially, someone else is doing the work, you’re taking someone else’s previous ideas. It’s something that has come from somewhere else.

“But this core idea of always being transparent about what you have used to do the work I think is fundamental.

“It should always be declared that AI was used as part of the work. If a teacher is using AI for something to do with marking an assessment, the key thing is to be transparent to the student.”

Utilising GenAI to enhance classroom learning

Despite the obvious risks to the integrity of the education system, Thorley is adamant that the adoption of GenAI as a tool to enhance classroom learning and free up some time for teachers will, ultimately, be a benefit across the board.

“Hopefully, AI will be able to free up time for teachers to focus on much more impactful work, as well as being able to serve this insight across a student or across a cohort that otherwise wouldn’t be able to be easily analysed,” he told EducationDaily.

“Our priority is to provide schools with a tool that works alongside the expertise of educators and academic policies and allows students to incorporate AI into their experiences in a transparent manner.

“This is crucial so that every learner has an early start in developing their skills, producing original work, and ultimately preparing them for their future careers.”